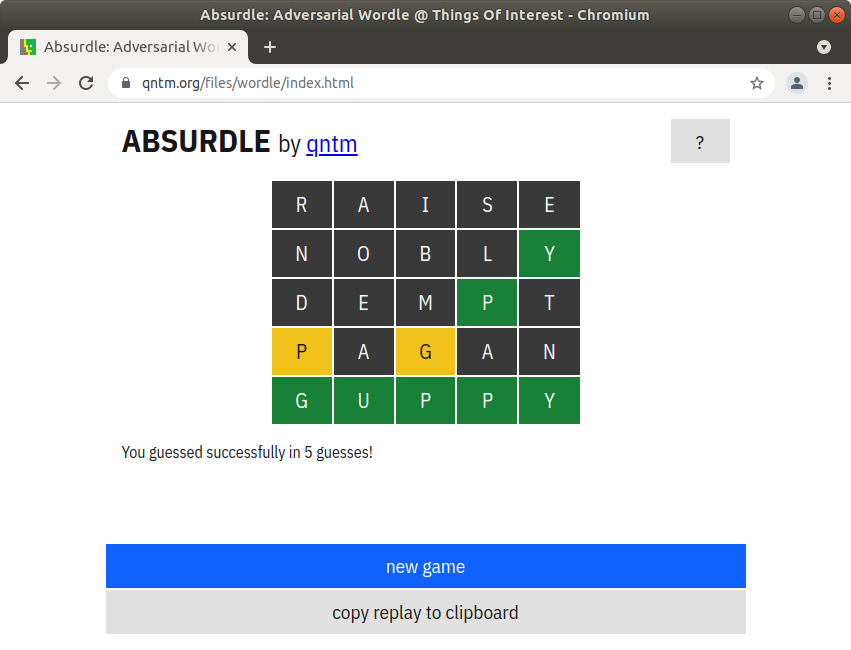

Last month, I posted a blog posted on approaches to creating an automatic solver for Wordle.

After that posting, there was also a Five Thirty Eight riddler question about the same topic by Zach Wissner Gross. In Zach’s original posting he referenced code and code and examples done by Laurent Lessard. In Lessard’s work, including this blog post Lessard ran experiments trying several different techniques for creating an optimal approach, e.g.

- Picking the guess that created the most choices (most buckets)

- Picking the guess that minimized the size of the largest bucket

- Picking a guess that maximized entropy

Experimental results suggested that the first of these was slightly better than the other two alternatives.

A week later, Zach Wissner-Gross posted the best solution received a solution credited to Jenny Mitchell. Either from Jenny or from Zach, this solution posted also turned the problem into a lookup table with each element of the tree having a designated solution. The declared victor was essentially a variation of the “maximize splits” approach

I was playing a little further with these solutions and trying to understand the search space. As discussed in previous post, each word splits the candidate mystery set into up to 238 unique buckets (3^6 minus five invalid choices) and in practice up to 150 of these buckets populated using the word TRACE. As Jenny Michell’s solution and Laurent Lessard’s experiments show, this is indeed a very good solution approach. However, I am skeptical whether it is the absolute best approach because of two reasons:

- In the context of creating a lookup-table, it is possible that different approaches could create just slightly more optimal approaches for parts of the search subtree.

- The splits themselves are also influenced by the choices of the valid guess words. There are quite a few, but it is not fully populated.

Zach made one statement in arguing for a maximizing splits approach that I am not convinced is true:

It didn’t matter how many words were in each constellation, just the total number of constellations.

To think of why, consider the following thought exercise of two hypothetical ways in which a a group of 20 candidates could be split by a guess into 10 buckets.

- One method would be word just happened to split this into 10 buckets of two elements each. In this case, the expected number of guesses would be 2.5 – one for the initial split into 10 buckets and then for each of these buckets one could either guess correctly (1 more guess) or incorrectly (2 more guesses) thus- 1 + (50% * 1) + (50% * 2) = 2.5

- A second method would be a word that creates 9 buckets of one element each, and a remaining bucket of 11 elements. In this case the expected number of guesses would be one for the initial split into 10 buckets and then all the buckets with one element could be guessed in the next guess – and the remaining ones however long it takes to split out the bucket of 16 – thus – 1 + 45% * 1) + (55% * x).

There is no inherent reasons what these two calculations have to be the same. Some notion of entropy can still play a role, even if maximizing entropy is not the best solution. Hence, I believe Zach’s statement above is a good description of the approach, but not guaranteed to find the absolute best solution.

So I created a metric that was a little more entropy based instead of purely the number of buckets or size of the largest bucket. I considered this as the “weighted size” of the bucket. In the example above, 55% of the mystery words are in the bucket with 11 elements and 45% of the mystery words are in a bucket with 1 element. So the weighted size is (55% * 11 + 45% * 1) = 6.05. This is in comparison to the weighted size of (100% * 2) = 2.0. So there is more entropy in the buckets that are all split out, than one that is still more concentrated in a larger bucket.

Note: That in general, I would guess that a lower weighted size should help – however, interestingly enough a bucket of size 2 is likely among one of the least efficient choices – so higher entropy might not always be the best solution in some of these small cases.

When I print a list of the top 50 choices that minimize this weighted size, I can also print their number of buckets as well as the largest bucket sizes in the table below:

num word bucket max ones wsize eguess

1 roate 126 195 23 60.42 3.31

2 raise 132 168 28 61.00 3.28

3 raile 128 173 22 61.33 3.28

4 soare 127 183 22 62.30 3.24

5 arise 123 168 26 63.73 3.26

6 irate 124 194 14 63.78 3.28

7 orate 127 195 28 63.89 3.30

8 ariel 125 173 21 65.29 3.33

9 arose 121 183 23 66.02 3.30

10 raine 129 195 27 67.06 3.24

11 artel 128 196 27 67.50 3.27

12 taler 134 196 24 67.74 3.29

13 ratel 134 196 25 69.84 3.29

14 aesir 116 168 32 69.88 3.33

15 arles 108 205 14 69.89 3.34

16 realo 112 176 20 69.95 3.36

17 alter 128 196 25 69.99 3.31

18 saner 132 219 33 70.13 3.26

19 later 134 196 34 70.22 3.33

20 snare 132 219 32 71.10 3.18

21 oater 128 195 38 71.25 3.38

22 salet 148 221 34 71.27 3.22

23 taser 134 227 31 71.28 3.26

24 stare 133 227 23 71.29 3.19

25 tares 128 227 26 71.54 3.24

26 slate 147 221 29 71.57 3.22

27 alert 131 196 25 71.60 3.24

28 reais 114 168 24 71.61 3.35

29 lares 118 205 22 71.74 3.33

30 reast 147 227 29 71.77 3.15

31 strae 125 227 16 71.85 3.19

32 laser 123 205 27 72.12 3.33

33 saine 136 207 25 72.59 3.25

34 rales 117 205 21 72.80 3.34

35 urate 122 202 25 72.83 3.31

36 crate 148 246 30 72.90 3.17

37 serai 110 168 20 72.92 3.30

38 toile 123 204 20 73.04 3.23

39 seral 128 205 24 73.08 3.17

40 rates 118 227 24 73.33 3.30

41 carte 146 246 30 73.52 3.21

42 antre 134 226 31 73.94 3.25

43 slane 133 225 25 73.99 3.19

44 trace 150 246 32 74.02 3.16

45 coate 123 192 22 74.51 3.22

46 carle 144 249 37 74.68 3.23

47 carse 139 237 26 74.83 3.20

48 stoae 110 177 18 74.90 3.26

49 reals 116 205 21 74.94 3.27

50 terai 113 194 17 75.14 3.27

The word “TRACE” is 44th on this list, even though it has the highest value for the number of of buckets in column #3. Similarly, if you instead want to pick the words with lowest maximum bucket size, a word like RAISE is lowest in column #4 but numbers in this column are not always the lowest expected weighted bucket size.

I am not convinced that a increase the entropy situation uniformly is best choice, any more than I am convinced a maximize buckets or maximize spread is optimal for the entire search tree. Instead, I think it can be slightly influenced by subtleties of particular trees as well as counts.

Looking at weighted numbers of guesses, recursively

To look at potential approaches and expectations, I decided to look at this inductively. In the end, we are creating a search tree, where the tree is composed of nodes ranging from one or more elements. What might be the expected number of guesses for these nodes and how can the be accumulated recursively?

- One of the bottom building blocks are nodes with one element. These are also listed in column #5 of the table above under the heading “ones”. There are not very many of them near the root of the tree, only 32 of them at a first level guess of TRACE or ~1% of the 2315 cases. If you are fortunate enough to reach one of them, it takes one more guess to confirm. However, as you go to lower levels of the tree, I also expect the percentage of buckets with one element to increase.

- Another building block can be an node with two elements. A described above, one can flip a coin get the right one ~50% of the time, so expected guesses are 1.5.

- Next are nodes with three elements. An average expectation could be to guess this in two guesses – using one of two possible approaches. Either you try the choices in this bucket one at a time and (1/3 * 1) + (1/3 * 2) + (1/3 * 3) and have expectation of 2 guesses to solve – or you find something that splits the three choices into two and pick in the next guess. If you are lucky one of the two guesses might split the other two if incorrect.

- Nodes with four or five elements might be even more “efficient” than nodes with three – since there is a larger probability of having a word that can split these into exactly four or five choices. At least this is what I’ve observed.

- As the number of elements within a bucket increases, one gets into more situations where the problem instead gets solved recursively. Find the expected guesses and then break the problem up recursively.

So I created an example that created a search tree in this more dynamic fashion. When the node has only two elements, don’t find a pick that evenly splits it into two buckets to maximize the split (or to minimize the bucket size) – but instead try guessing one of the choices and if that wasn’t correct – pick the other. Hence, using a strategy with a 1.5 expected count rather than 2 expected count…

I did this recursively, and when a node had too many elements in it, then rather than an exhaustive search for the absolute best candidate – I used a rough heuristic (wsize in my case, but it could have been number of buckets or size of largest bucket) – and tried the best candidate. I think my rough heuristic can do a reasonable job – but I don’t claim to be absolutely optimal because there is always a chance that further down on the list might just be slightly better.

Looking at the table above, I notice that TRACE does pretty well within this overall list if used as a first choice, even if followed up later with a different heuristic. I am also struck that there is a word REAST at number 30 on the list that might be even slightly better as this first choice, even though it is not the best choice in terms of other metrics like maximal number of splits, smallest bucket size or weighted bucket size.

Instead, REAST looks like it might have almost as many buckets as TRACE (247 vs 250) but then also a slightly smaller maximum bucket size (227 vs 246).

Summary

I am not claiming that I have found the best solution for solving Wordle.

Instead, I am suggesting that other claims of ideal might not as strong. With a lookup table approach, I do think one could find a case that optimizes. However, rather than a single heuristic for creating all of that table, it may be the case that parts of the search tree use slightly different heuristics.

In the table above, the last column eguess is an estimated guess derived recursively and weighted by the number of elements in each node along the way. There is possibility my calculations are slightly off, but I’ll also observe numbers slightly lower than those presented in FiveThirtyEight.

I expect the largest form of discrepancy is for nodes with two elements. A naive approach of “maximize spread” solves this in two guesses, whereas an approach of try one and if it isn’t right try the other solves it on average in one and a half.

The second potential discrepancy isn’t as strong but comes from examining expectations for REAST vs. TRACE. These might suggest that it is possible to pick particular choices optimized for different parts of the tree (in this case the initial guess, but it could also be lower down). Undoubtedly maximizing spread and minimizing largest buckets are good things to do and lead to strategies that perform very well. It is with that step after that of claiming “ideal” that I am questioning.

Two general notes made in the last blog post also apply. First, this experiment was done in the context of a search tree for exactly the 2315 possible words in Wordle. I expect the general techniques to still apply but results to be different if picking a larger set such as ~40,000 words in Google corpus that are five letters long.

Second note is that the way systems solve Wordle are different from how people solve Wordle. My observation is people often try to find initial letters to work from and go from there. Whereas a system it is a combinatoric elimination problem. This was reinforced by Wordle for 14 February where chosing REATH followed by DOILY narrowed the space to a single word in a way I’m sure I would still have no clue…